
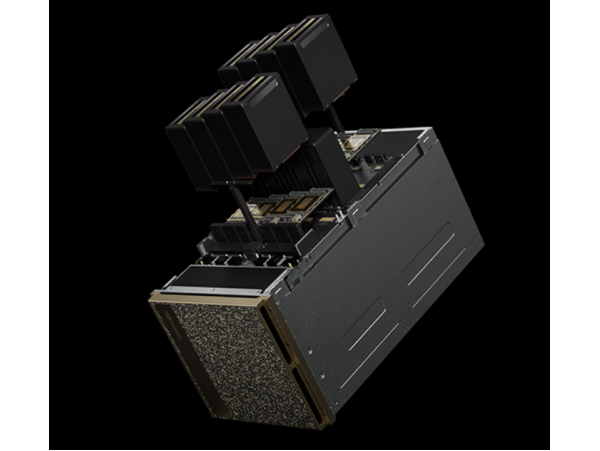

NVIDIA DGX B200 AI Server

- Manufacturer: NVIDIA

- Product code/Part No.: TM9576 / DGX B200

- Status: Pre-order

Price: Call for a quote

Don't want to buy? You can RENT this server!

Please contact a sales representative for details.


Product information: NVIDIA DGX B200 AI Server

The foundation for your AI center of excellence.

NVIDIA DGX™ B200 is a unified AI platform for develop-to-deploy pipelines, aimed at businesses of any size at any stage of their AI journey. Equipped with eight NVIDIA B200 Tensor Core GPUs interconnected with fifth-generation NVIDIA® NVLink®, DGX B200 offers 3X the training performance and 15X the inference performance of previous generations. Built on the NVIDIA Blackwell GPU architecture, DGX B200 can handle diverse workloads, including large language models, recommender systems, and chatbots, which makes it a fit for businesses looking to accelerate their AI transformation.

→ Detailed introduction to NVIDIA DGX B200

SPECIFICATIONS

| Specification | Details |
| --- | --- |
| GPU | 8x NVIDIA B200 Tensor Core GPUs |
| GPU Memory | 1,440GB total GPU memory |
| Performance | 72 petaFLOPS training and 144 petaFLOPS inference |
| Power Consumption | ~14.3kW max |
| CPU | 2 Intel® Xeon® Platinum 8570 Processors; 112 cores total, 2.1 GHz (Base), 4 GHz (Max Boost) |
| System Memory | Up to 4TB |
| Networking | 4x OSFP ports serving 8x single-port NVIDIA ConnectX-7 VPI |
| Management Network | 10Gb/s onboard NIC with RJ45; 100Gb/s dual-port Ethernet NIC; host baseboard management controller (BMC) with RJ45 |
| Storage | OS: 2x 1.9TB NVMe M.2; internal storage: 8x 3.84TB NVMe U.2 |
| Software | NVIDIA AI Enterprise: optimized AI software; NVIDIA Base Command™: orchestration, scheduling, and cluster management; DGX OS / Ubuntu: operating system |
| Rack Units (RU) | 10 RU |
| System Dimensions | Height: 17.5in (444mm); Width: 19.0in (482.2mm); Length: 35.3in (897.1mm) |
| Operating Temperature | 5–30°C (41–86°F) |
| Enterprise Support | Three-year Enterprise Business-Standard Support for hardware and software; 24/7 Enterprise Support portal access; live agent support during local business hours |
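For capacity planning, the system-wide totals in the table can be broken down into per-GPU figures and a rough rack-density estimate. The sketch below is illustrative only: the constants are copied from the specification table, while the even split across GPUs, the helper names (`per_gpu`, `systems_per_rack`), and the 40 kW / 42U rack used in the example call are assumptions that do not come from the product page.

```python
# Figures copied from the specification table.
GPU_COUNT = 8
TOTAL_GPU_MEMORY_GB = 1440
TRAINING_PFLOPS = 72
INFERENCE_PFLOPS = 144
MAX_POWER_KW = 14.3   # listed as "~14.3kW max"
DATA_DRIVES = 8
DATA_DRIVE_TB = 3.84
SYSTEM_RACK_UNITS = 10


def per_gpu(total: float, gpus: int = GPU_COUNT) -> float:
    """Split a system-wide total evenly across the GPUs (an assumption)."""
    return total / gpus


def systems_per_rack(rack_power_kw: float, rack_units: int) -> int:
    """Estimate how many systems fit in a rack, limited by power and by space."""
    by_power = int(rack_power_kw // MAX_POWER_KW)
    by_space = rack_units // SYSTEM_RACK_UNITS
    return min(by_power, by_space)


memory_per_gpu_gb = per_gpu(TOTAL_GPU_MEMORY_GB)
training_per_gpu = per_gpu(TRAINING_PFLOPS)
inference_per_gpu = per_gpu(INFERENCE_PFLOPS)
raw_internal_storage_tb = DATA_DRIVES * DATA_DRIVE_TB

print(f"GPU memory per GPU: {memory_per_gpu_gb:.0f} GB")
print(f"Training per GPU: {training_per_gpu:.0f} petaFLOPS")
print(f"Inference per GPU: {inference_per_gpu:.0f} petaFLOPS")
print(f"Raw internal storage: {raw_internal_storage_tb:.2f} TB")

# Hypothetical rack: 40 kW power budget, 42U of space.
print(f"Systems per rack: {systems_per_rack(40, 42)}")
```

With the hypothetical 40 kW rack, power rather than space is the limiting factor. The storage figure is raw capacity before any RAID or filesystem overhead.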


CONSULTING - SALES - WARRANTY

Hồ Chí Minh head office

+84 2854 333 338 - Fax: +84 28 54 319 903

NTC Đà Nẵng branch

+84 236 3572 189 - Fax: +84 236 3572 223

NTC Hà Nội branch

+84 2437 737 715 - Fax: +84 2437 737 716

Copyright © 2017 thegioimaychu.vn. All Rights Reserved.